---
template: text.html
title: Disinformation demystified
subtitle: Misinformation, but deliberate
date: 2019-09-10
slug: disinfo
---

As with the disambiguation of any word, let's start with its etymology and definition.
According to [Wikipedia](https://en.wikipedia.org/wiki/Disinformation),
_disinformation_ is borrowed from the Russian word _dezinformatsiya_ (дезинформа́ция),
derived from the title of a KGB black propaganda department.

> Disinformation is false information spread deliberately to deceive.

To fully understand disinformation, especially in the modern age, we need to understand the
key factors of any successful disinformation operation:

- creating disinformation (what)
- the motivation behind the op, or its end goal (why)
- the medium used to disperse the falsified information (how)
- the actor (who)

At the end, we'll also look at how you can use disinformation techniques to maintain OPSEC.

To break the monotony, I will also be using the term "information operation", or the shortened
forms -- "info op" & "disinfo".

## Creating disinformation

Crafting or creating disinformation is by no means a trivial task. Often, the quality
of any disinformation sample is a strong indicator of the level of sophistication of the
actor involved, i.e. is it a 12-year-old troll or a nation state?

Well-crafted disinformation always has one primary characteristic -- "plausibility".
The disinfo must sound reasonable. It must induce the notion that it's _likely_ true.
To achieve this, the target -- be it an individual, a specific demographic or an entire
nation -- must be well researched. A deep understanding of the target's culture, history,
geography and psychology is required, along with circumstantial and situational awareness
of the target.

There are many forms of disinformation. A few common ones are staged videos / photographs,
recontextualized videos / photographs, blog posts, news articles & most recently -- deepfakes.

Here's a tweet from [the grugq](https://twitter.com/thegrugq), showing a case of recontextualized
imagery:

<blockquote class="twitter-tweet" data-dnt="true" data-theme="dark" data-link-color="#00ffff">
<p lang="en" dir="ltr">Disinformation.
<br><br>
The content of the photo is not fake. The reality of what it captured is fake. The context it’s placed in is fake. The picture itself is 100% authentic. Everything, except the photo itself, is fake.
<br><br>Recontextualisation as threat vector.
<a href="https://t.co/Pko3f0xkXC">pic.twitter.com/Pko3f0xkXC</a>
</p>&mdash; thaddeus e. grugq (@thegrugq)
<a href="https://twitter.com/thegrugq/status/1142759819020890113?ref_src=twsrc%5Etfw">June 23, 2019</a>
</blockquote>
<script async src="https://platform.twitter.com/widgets.js" charset="utf-8"></script>

## Motivations behind an information operation

I like to broadly categorize any info op as either proactive or reactive.
Proactive disinformation is spread with the desire to influence the target
either before or during the occurrence of an event. This is especially observed
during elections.[^1]
In such offensive information operations, the target's psychological state can be affected by
spreading **fear, uncertainty & doubt**, or FUD for short.

Reactive disinformation is when the actor, usually a nation state in this case,
screws up and wants to cover their tracks. A fitting example of this is the case
of Malaysia Airlines Flight 17 (MH17), which was shot down while flying over
eastern Ukraine. This tragic incident has been attributed to Russian-backed
separatists.[^2]
Russian media is known to have disseminated a number of alternative, and some even
conspiratorial, theories[^3] in response. The number grew as the investigations of the
Dutch-led Joint Investigation Team (JIT) pointed towards the separatists.
The idea was to **muddle the information space** with these theories so that, as a result,
potentially correct information takes a credibility hit.

Another motive for an info op is to **control the narrative**. This is often seen
in totalitarian regimes, where the government decides what the media portrays to the
masses. The ongoing Hong Kong protests are a good example.[^4] According to [NPR](https://www.npr.org/2019/08/14/751039100/china-state-media-present-distorted-version-of-hong-kong-protests):

> Official state media pin the blame for protests on the "black hand" of foreign interference,
> namely from the United States, and what they have called criminal Hong Kong thugs.
> A popular conspiracy theory posits the CIA incited and funded the Hong Kong protesters,
> who are demanding an end to an extradition bill with China and the ability to elect their own leader.
> Fueling this theory, China Daily, a state newspaper geared toward a younger, more cosmopolitan audience,
> this week linked to a video purportedly showing Hong Kong protesters using American-made grenade launchers to combat police.
> ...

## Media used to disperse disinfo

As seen in the above example of totalitarian governments, national TV and newspaper agencies
play a key role in influence ops en masse. They guarantee outreach because of the channel's
or paper's popularity.

Twitter is another obvious example. Due to the ease of creating accounts and the ability to
generate activity programmatically via the API, Twitter bots are the go-to choice today for
info ops. Essentially, an actor attempts to create "discussions" amongst "users" (read: bots),
to push their narrative(s). Twitter also provides analytics for every tweet, enabling actors to
get realtime insights into what sticks and what doesn't.
The use of Twitter was seen during the previously discussed MH17 case, where Russia employed its troll
factory -- the [Internet Research Agency](https://en.wikipedia.org/wiki/Internet_Research_Agency) (IRA) --
to create discussions about alternative theories.

In India, disinformation is often spread via YouTube, WhatsApp and Facebook. Political parties
actively invest in creating group chats to spread political messages and memes. These parties
have volunteers whose sole job is to sit and forward messages.
Apart from political propaganda, WhatsApp also serves as a medium for fake news. In most cases,
this is disinformation without a motive, or the motive is hard to determine simply because
the source is impossible to trace, lost in forwards.[^5]
This is a difficult problem to combat, especially given the nature of the target audience.

## The actors behind disinfo campaigns

I doubt this requires further elaboration, but in short:

- nation states and their intelligence agencies
- governments, political parties
- other non/quasi-governmental groups
- trolls

This essentially sums up the what, why, how and who of disinformation.

## Personal OPSEC

This is a fun one. Now, it's common knowledge that
**STFU is the best policy**. But sometimes this might not be possible because,
after all, inactivity leads to suspicion, and suspicion leads to scrutiny, which might
lead to your OPSEC being compromised.
So if you really have to, you can feign activity using disinformation. For example,
pick a place, and throw in subtle details pertaining to the weather, local events
or regional politics of that place into your disinfo. Assuming this is Twitter, you can
tweet stuff like:

- "Ugh, when will this hot streak end?!"
- "Traffic wonky because of the Mardi Gras parade."
- "Woah, XYZ place is nice! Especially the fountains by ABC street."

Of course, if you're a nobody on Twitter (like me), this is a non-issue for you.

142

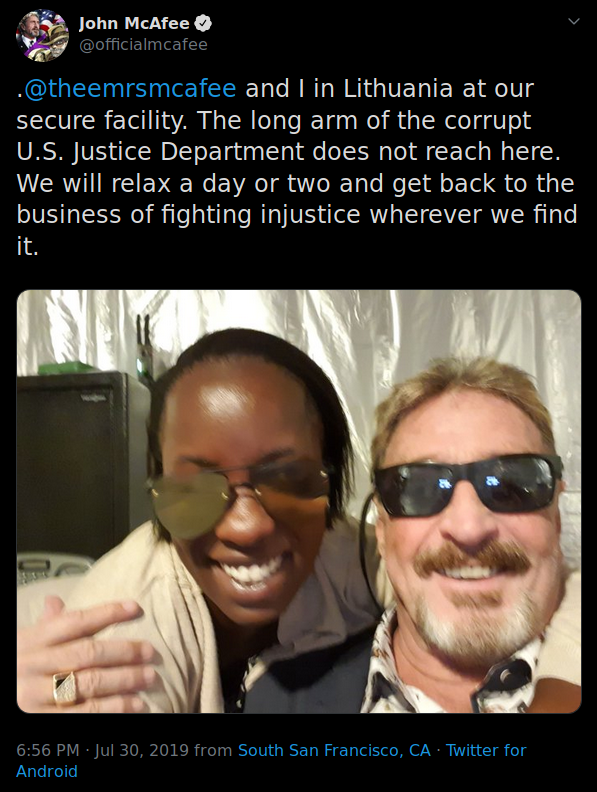

143And please, don't do this:

## Conclusion

The ability to influence someone's decisions/thought process in just one tweet is
scary. There is no simple way to combat disinformation. Social media is hard to control.
Just like anything else in cyber, this too is an endless battle between social media corps
and motivated actors.

A huge shoutout to Bellingcat for their extensive research in this field, and for helping
folks see the truth in a post-truth world.

[^1]: [This](https://www.vice.com/en_us/article/ev3zmk/an-expert-explains-the-many-ways-our-elections-can-be-hacked) episode of CYBER talks about election influence ops (features the grugq!).
[^2]: The [Bellingcat Podcast](https://www.bellingcat.com/category/resources/podcasts/)'s season one covers the MH17 investigation in detail.
[^3]: [Wikipedia section on MH17 conspiracy theories](https://en.wikipedia.org/wiki/Malaysia_Airlines_Flight_17#Conspiracy_theories)
[^4]: [Chinese newspaper spreading disinfo](https://twitter.com/gdead/status/1171032265629032450)
[^5]: Use an adblocker before clicking [this](https://www.news18.com/news/tech/fake-whatsapp-message-of-child-kidnaps-causing-mob-violence-in-madhya-pradesh-2252015.html).